Issue No. 7

Grab a cup of coffee and sit down with the newsletter to learn about some Bible translation (BT) project spotlights, a new LLM release with a massive context window, VR language learning, and more!

But first, what is TechNews.Bible?

TechNews.Bible is intended to be a community resource that enables Bible translation technologists to discover, learn, share, and collaborate. Read about the backstory and intentions of the newsletter here.

∴ BT Project Spotlights

The Bible Aquifer

The vision for the Bible Aquifer project is described in a new article from the ETEN Innovation Lab Substack. The idea of an open and interlinked Bible knowledge repository continues to excite me. The Bible Well app demonstrates the current capabilities of the Aquifer.

Project Accelerate

Project Accelerate is a cross-organization collaboration that aims to quickly test and deliver innovative tools for Bible translators. Its base application, Codex, is a fork of VS Code. Codex is a simple deployment target for Open Components and other tools built with web technologies. Another part of the project, Translator's Copilot, will use Codex to explore AI-powered assistance for translators.

∴ Upcoming Events

OCE Hackathon 2024

The Open Components Hackathon is underway! The event has two major parts. The Learnathon (Feb. 26 to Mar. 1) features daily learning sessions on topics such as "Accessing the Bible Aquifer" and Prediction Guard. The Hackathon (Mar. 4 to 8) lets self-forming teams explore new solutions together.

Missional AI Conference

Registration is open for the Global Missional AI Summit, which will take place in early April this year. The conference intends to focus on leveraging AI to accelerate Bible translation and Kingdom growth. I'm told several great speakers are already confirmed for the event.

∴ AI News + Research

Self-Rewarding Language Models

Researchers at Meta and NYU introduce a technique in which a language model acts as its own judge, scoring its own outputs to guide its training, instead of relying on a separate reward model trained on human preferences as in Reinforcement Learning from Human Feedback (RLHF). The results look very promising: by some measures, the resulting model outperforms Claude 2, Gemini Pro, and GPT-4. Read the paper.
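To make the idea a bit more concrete, here is a minimal sketch of one data-collection round as I understand it from the paper: the model samples several responses to a prompt, scores each one itself (LLM-as-a-Judge), and the highest- and lowest-scored responses become a (chosen, rejected) pair. The paper then trains on such pairs with Direct Preference Optimization (DPO), which is not shown here. The `generate` and `judge` callables, the class names, and the default of four candidates are hypothetical placeholders for the model calls, not the authors' code.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    response: str
    score: float  # the model's own judge score (e.g. on a small 0-5 scale)


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str


def build_preference_pair(prompt: str, candidates: list[Candidate]) -> Optional[PreferencePair]:
    """Use the highest- and lowest-scored responses as (chosen, rejected).

    Returns None if there are fewer than two candidates or all scores tie,
    because such a pair carries no preference signal.
    """
    if len(candidates) < 2:
        return None
    best = max(candidates, key=lambda c: c.score)
    worst = min(candidates, key=lambda c: c.score)
    if best.score == worst.score:
        return None
    return PreferencePair(prompt, best.response, worst.response)


def self_rewarding_round(
    prompts: list[str],
    generate: Callable[[str, int], list[str]],  # hypothetical: sample n responses
    judge: Callable[[str, str], float],         # hypothetical: same model scores a response
    n: int = 4,
) -> list[PreferencePair]:
    """One data-collection pass; the pairs would then feed a DPO training step."""
    pairs = []
    for prompt in prompts:
        candidates = [Candidate(r, judge(prompt, r)) for r in generate(prompt, n)]
        pair = build_preference_pair(prompt, candidates)
        if pair is not None:
            pairs.append(pair)
    return pairs
```

The key design point is that the same model plays both roles, so each training iteration can, in principle, improve the judge as well as the responses.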

Gemini 1.5 Pro announced with translation example

Google Bard is now Gemini. Alongside the name change, Google announced Gemini 1.5, its next model generation, which uses a Mixture-of-Experts architecture. The technical paper describes a context window of up to 10 million tokens (and early feedback makes its performance sound very promising). Of particular relevance to Bible translation, the paper demonstrates the model translating into a low-resource language using a grammar, a dictionary, and parallel sentences, all supplied in the context window:

Finally, we qualitatively showcase the in-context learning abilities of Gemini 1.5 Pro enabled by very long context: for example, learning to translate a new language from a single set of linguistic documentation. With only instructional materials (500 pages of linguistic documentation, a dictionary, and ≈ 400 parallel sentences) all provided in context, Gemini 1.5 Pro is capable of learning to translate from English to Kalamang, a language spoken by fewer than 200 speakers in western New Guinea in the east of Indonesian Papua, and therefore almost no online presence. Moreover, we find that the quality of its translations is comparable to that of a person who has learned from the same materials.

In a footnote, the quality of the results is qualified:

This is not to say that the task is solved; both the human and Gemini 1.5 Pro make avoidable errors, though typically of different kinds. The human errors tend to be retrieval failures, where they pick a suboptimal phrase because they could not find the ideal reference (because rereading the entire set of materials for each sentence is infeasible for a human). The model failures tend to be inconsistent application of rules, like that the word “se” is pronounced “he” after a vowel (this alternation is described in the phonology section of the grammar and reflected in the additional parallel sentence data, but the model may be confused by the fact that the underlying “se” form is used as the gloss throughout the examples within the grammar), or lack of reflection, like that the word “kabor”, although it is defined as “to be full” in the dictionary, is only used for stomachs/hunger in all examples of its use.

For more details on the translation use case, see page 13 of the technical paper.
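For technologists wondering what "all in the context window" looks like in practice, here is a rough sketch that packs a grammar, a dictionary, and parallel sentences into a single prompt ahead of the sentence to translate. The `build_translation_prompt` helper, the section headings, and the ordering are my own hypothetical choices, not the prompt format from the Gemini paper.

```python
def build_translation_prompt(
    grammar: str,
    dictionary: dict[str, str],
    parallel: list[tuple[str, str]],
    sentence: str,
    language: str,
) -> str:
    """Pack all reference material plus the task into one long prompt (hypothetical layout)."""
    # Dictionary entries as "headword: gloss", sorted for a stable order.
    dictionary_text = "\n".join(f"{word}: {gloss}" for word, gloss in sorted(dictionary.items()))
    # Each parallel pair shown as an English line followed by its translation.
    parallel_text = "\n".join(f"English: {en}\n{language}: {tl}" for en, tl in parallel)
    sections = [
        f"Use the materials below to translate English into {language}.",
        "## Grammar\n" + grammar.strip(),
        "## Dictionary\n" + dictionary_text,
        "## Parallel sentences\n" + parallel_text,
        f"## Task\nTranslate into {language}:\n{sentence}",
    ]
    return "\n\n".join(sections)
```

For example, `build_translation_prompt(grammar_text, {"kabor": "to be full"}, pairs, "<sentence>", "Kalamang")` places the task last, after all the reference material. With a real grammar and dictionary this string becomes very long, which is exactly where a very large context window matters.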

∴ BT Domain Knowledge for Technologists and Innovators

The Statistical Restoration (SR) Greek New Testament

The Center for New Testament Restoration has released a Greek New Testament that uses transparent statistical analysis to make text-critical decisions. Read the paper. A physical edition can be purchased as well. If you aren't familiar with the excellent work that the CNTR does, check out their New Testament Collation. I've found it to be beneficial for personal study.

Language: a bridge or a barrier to people flourishing

With real examples, this article walks through some of the difficulties that speakers of minority languages experience. It's a great reminder of how spoken language is connected to a wide array of other opportunities in life (economic, educational, social, etc.). As someone without minority language experience, I found it eye-opening. From Michel Kenmoge.

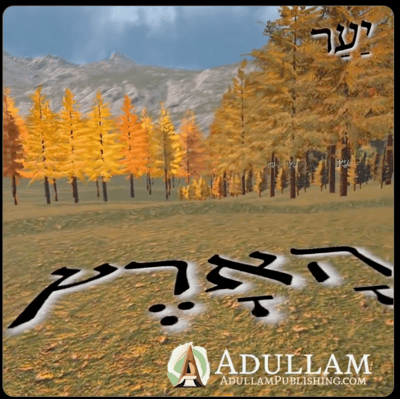

A VR Demo for Language Learning

Now this is a "spatial computing" application I can get behind! This video demonstrates using virtual reality to bring spatial embodiment to language learning (see the screenshot below). The demo uses Hebrew as an example. Watch the video. As I become increasingly convinced of the benefits of total physical response in language learning, applications like this are all the more exciting. From Adullam Media & Publishing.

Language learning is one (incredibly cool!) application, but how might VR/AR be used for Bible study or internalization and familiarization?

∴ Productivity Tip

Google Gemini (formerly Bard) can access YouTube videos. What does this mean? You can reference a YouTube video by URL in a prompt, and the model can work with the video's content much as it works with text. One powerful application is summarizing YouTube content. The following prompt (credit to Brian Roemmele) quickly produces a summary of a YouTube video:

Please perform a detailed summary of this video. Break it down in sequential fashion and use bullet points and headings of key points. The YouTube video is: {URL}

I've found that this works for some YouTube videos but not others. When it does work, it can be quite useful. YouTube video summaries can play various roles in your knowledge management workflow. They can function as a filter to assess if longer videos are worth your time. You can also use them to take notes on videos with important ideas. How might you apply this fantastic feature in your daily work?
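If you reuse Brian Roemmele's summary prompt often, a tiny helper that fills in the {URL} placeholder can save some copy-pasting. This is only a convenience sketch: it does not call Gemini (you still paste the result into the chat), and the `youtube_summary_prompt` name and the list of accepted YouTube hostnames are my own assumptions.

```python
from urllib.parse import urlparse

# The prompt text from above, with {URL} as the placeholder.
SUMMARY_PROMPT = (
    "Please perform a detailed summary of this video. Break it down in sequential "
    "fashion and use bullet points and headings of key points. "
    "The YouTube video is: {URL}"
)

# Assumed set of hostnames treated as YouTube links.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com", "youtu.be"}


def youtube_summary_prompt(url: str) -> str:
    """Return the summary prompt for a YouTube URL, rejecting other hosts."""
    host = urlparse(url).netloc.lower()
    if host not in YOUTUBE_HOSTS:
        raise ValueError(f"not a YouTube URL: {url}")
    return SUMMARY_PROMPT.format(URL=url)
```

Rejecting non-YouTube links up front is a small guard against pasting the wrong URL; since the prompt doesn't work for every video, it won't catch every failure.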

As always, thanks for reading! If you have questions, ideas, or content worth sharing, please drop me a line.